1. Humanity at a Precipice

At A Crossroads Between Life And Technology

If we are not intentionally designing AI in alignment WITH and FOR Life, we are inadvertently designing it AGAINST Life.

We stand at a historic inflection point where the exponential rise of artificial intelligence (AI)[2] could either accelerate our civilization’s collapse or catalyze its transformation. As we hurtle toward artificial general intelligence (AGI)[3] and artificial superintelligence (ASI)[4], the stakes of our choices today could not be higher. Our converging crises of climate collapse, species extinction, geopolitical instability, deteriorating mental health, and the wide range of risks associated with the AI revolution are no longer wake-up calls; they are blaring alarms. They signal that our current trajectory is fundamentally incongruent with Life.

[2] Artificial Intelligence (AI): A broad umbrella term for various technologies that perform tasks requiring human-like intelligence. What we commonly call AI today primarily consists of machine learning systems, particularly deep neural networks, that improve through data exposure. Other specific approaches include reinforcement learning (learning through trial and error) and various forms of inductive learning. While these technologies excel at narrow tasks, they lack broader understanding or capabilities, and ultimately are statistical, pattern-matching technologies with no inherent understanding of Living Systems.

[3] Artificial General Intelligence (AGI): Commonly described as highly autonomous systems capable of performing intellectual tasks across a broad range of domains, often with adaptability, transfer learning, and problem-solving abilities said to rival or exceed those of humans. However, it is critical to note that definitions of AGI are far from settled and remain highly contested. They shift across institutions, marketing narratives, and power structures, often reflecting more about the ambitions of their proponents than any universally agreed benchmark. Moreover, such framings tend to reinforce a narrow, normative view of “intelligence”, one that overlooks the plural, embodied, and relational intelligences found across human, non-human, and living systems. While this definition is offered to orient general readers, we emphasize the need to move beyond singular metrics of cognition toward more life-aligned and pluralistic understandings of intelligence.

[4] Artificial Superintelligence (ASI): A hypothetical form of artificial intelligence that surpasses human intelligence in all aspects, including creativity, problem-solving, emotional intelligence, and social understanding. Unlike Artificial General Intelligence (AGI), which matches human cognitive abilities, ASI would have the capacity for self-improvement, autonomous reasoning, and decision-making at a level far beyond human capabilities. ASI could potentially redesign itself, solve complex global challenges, and operate with an intelligence that is incomprehensible to humans, raising profound ethical, existential, and governance concerns. The bridge between AGI and ASI is likely extremely narrow: due to recursive self-improvement at exponential scales, the moment AGI is achieved, ASI may rapidly follow through a process sometimes called an "intelligence explosion." This transition represents a horizon beyond which predictions become increasingly uncertain.

Few fully appreciate the state of our precarity, although it has been extensively documented by scientists, interdisciplinary scholars, and foresight practitioners. The comprehensive “Planetary Health-Check” report reveals we have already pushed Earth beyond its “safe operating space for humanity” in 6 of the 9 dimensions for planetary livability, with two more boundaries teetering on the brink.[5] These boundaries are not theoretical thresholds. They are Life support systems: climate stability, biodiversity, fresh water, soil health, and biogeochemical flows that make Earth habitable.

[5] Planetary Health-Check Report, 2024.

One of the most sobering projections comes from the Institute and Faculty of Actuaries and the University of Exeter, warning that if global temperatures rise 3°C above pre-industrial levels, up to 40% of the world’s population could die before 2050, triggering an unprecedented humanitarian crisis.[6] This ecological collapse is compounded by the ongoing, largely silent catastrophe of biodiversity loss, with more than one million species at risk of extinction, many within decades:[7] an unraveling of Life’s web that threatens ecosystem resilience and planetary health at its core.

These intersecting crises converge with mounting economic instability, as our current financial systems, rooted in neoclassical capitalism and a “cowboy economy mindset”, prioritize short-term extraction over long-term sustainability and create an increasingly fragile future for work, supply chains, and global economic stability.[8] Meanwhile, economists and sociologists point to escalating inequality, the erosion of trust in institutions, and the fraying of social cohesion as signs of a civilization under duress. As Professor Paul Ehrlich and colleagues soberly conclude, “The scale of the threats is in fact so great that it is difficult to grasp for even well-informed experts… We ask what political or economic system, or leadership, is prepared to handle the predicted disasters, or even capable of such action.”[9]

[6] Tim Lenton, Jesse F. Abrams, et al., Planetary Solvency: Finding Our Balance with Nature (London: Institute and Faculty of Actuaries and University of Exeter, 2024), https://actuaries.org.uk/planetary-solvency.

[7] IPBES. (2019). Summary for policymakers of the global assessment report on biodiversity and ecosystem services. S. Díaz, J. Settele, E. S. Brondízio, H. T. Ngo, et al. (Eds.). IPBES Secretariat. https://ipbes.net/global-assessment

[8] World Economic Forum. (2024). Global risks report 2024. https://www.weforum.org/publications/global-risks-report-2024/

[9] Ehrlich, P. R., Blumstein, D. T., & Atkinson, A. (2021). "Underestimating the Challenges of Avoiding a Ghastly Future." Frontiers in Conservation Science, 1, 615419. https://doi.org/10.3389/fcosc.2020.615419

In particular, the current path of our powerful, Life-altering technologies is deeply troubling. Our exponential AI systems, advancing at a pace that outstrips our collective capacity to understand or govern them, are ravaging both human and ecological systems. They operate as sophisticated extraction engines: simultaneously mining our data, depleting our attention, eroding our mental health, concentrating power and capital in fewer hands, and consuming ever-increasing amounts of natural resources, all while claiming to solve the problems they unwittingly perpetuate by structural design. The metrics they optimize for (engagement, addiction, efficiency, growth) run directly counter to Life’s flourishing. At scale, these systems generate compounding risks to the continuity of Life: existential, not in some speculative, far-future sense, but in their present-day threat to the ecological, social, and civilizational foundations upon which all Life depends.

“When we create systems that run contrary to the innate Life-giving capacities of the universe, we create systems for destruction, entrapment, collapse, and suffering.”

— Dr. Anneloes Smitsman

This is the pivotal choice before us:

Continue building intelligence divorced from Life’s patterns for flourishing and vitality… or design intelligence in alignment with them.

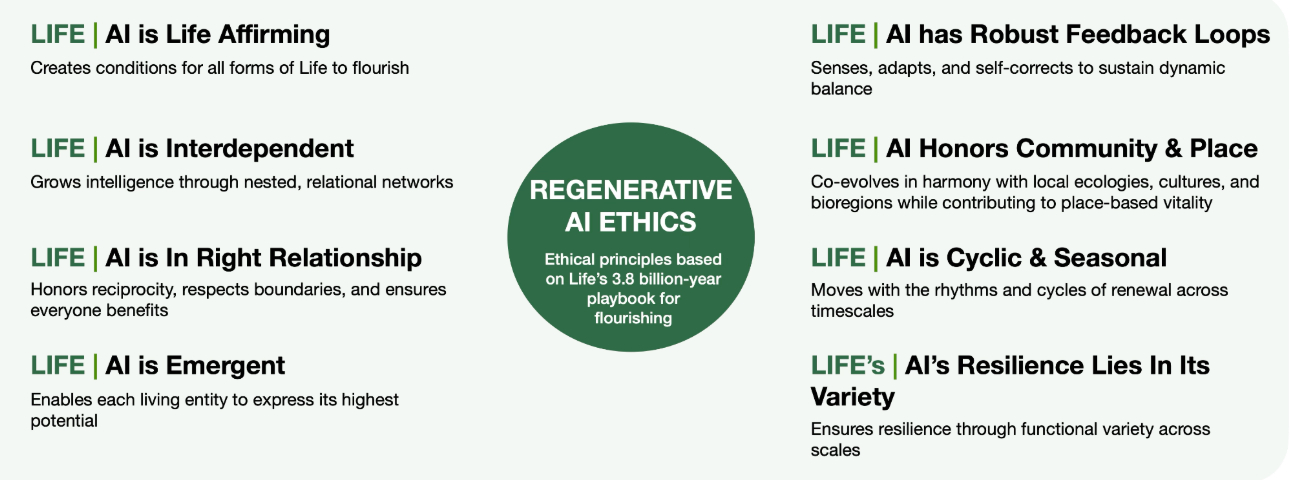

We call for a foundational realignment of AI Systems with Regenerative AI Ethics — derived from Life’s principles for systemic health and vitality. Designing with these principles places Life itself at the center, so that AI enhances Life’s flourishing at all scales — including our own.

Why does Aligning with Life Matter?

For 3.8 billion years, Earth’s Living Systems have developed time-tested principles for sustaining vitality and complexity: interdependence, reciprocity, right relationship, decentralization, adaptability, variety, meaningful feedback loops, and cyclicality. These are not philosophical abstractions—they are the conditions under which complexity thrives. These principles allow forests to self-regulate, coral reefs to adapt, ecosystems to regenerate, and our lives to thrive — without collapse. When we ignore these principles, we don’t just create unethical systems — we create unstable ones. Intelligence designed outside of Life’s patterns will inevitably mirror the very forces driving planetary breakdown: extraction, acceleration, separation, and control.

We do not need more guardrails.

We need a new foundation.

A foundation that recouples AI with the same principles that make Life possible — not just for humans, but for all species, all scales, and all generations.

But what is Life, with a capital L?

Defining Life is a profound and ongoing inquiry. Scientists, philosophers, and thinkers across cultures have grappled with this question for centuries. Rather than seeking a singular definition, we acknowledge that Life is a dynamic continuum, rich with mystery and possibility.

Whether viewed through the lens of quantum physics, where everything that vibrates possesses energy and a form of aliveness, or through biological sciences that explore the boundaries between the animate and inanimate, we recognize that Life transcends simple categorization. This perspective invites us to approach AI Ethics with humility and reverence for the complexities of existence.

Life is not a static thing. Nor is it reducible to DNA, carbon atoms, or metabolic processes. Life is pattern, process, and participation — a continual dance of becoming across nested systems.

Life is the capacity to self-organize, adapt, renew, and evolve in relationship with others. It expresses itself not through hierarchy, but through mutualism. Not through control, but through reciprocity.

Life is not just a noun—it is a verb. A movement. A sacred pattern of mutual becoming.

To speak of “aligning AI with Life” is not to romanticize biology—it is to recognize that Life operates according to elegant, time-tested principles that allow complexity to flourish without collapsing.

In an age of synthetic systems and planetary-scale computation, remembering what Life is may be the most important act of intelligence we humans can offer.

At the heart of our exploration is also the concept of Living Systems. These are complex, self-organizing networks that sustain themselves through dynamic exchanges of energy, matter, and information. They exist at all scales: from microscopic cells to vast ecosystems, from individual organisms to the entire biosphere.

You are a Living System, and you are part of a larger Living System. Every forest, ocean, and atmosphere is a Living System. Recognizing this interconnectedness is crucial as we consider the ethical frameworks for artificial intelligence. Our technologies, including AI, are not neutral. They are becoming increasingly powerful and autonomous, and their interactions with Living Systems must be guided by principles that enhance their thrivability, not diminish it.

And Life is Intelligent.

For too long, we’ve mistaken speed for wisdom. We’ve equated intelligence with prediction, rational mind, and optimization — forgetting that true intelligence is the capacity to discern, relate, and act in coherence with the whole.

Regenerative AI Ethics requires us to reimagine intelligence itself. Not as a mechanical function or analysis, but as a living capacity to relate, to respond, and to perceive wholes within complexity. There is intelligence in the way ecosystems self-regulate, in the migration patterns of animals, and in the intricate relationships between species. By expanding our definition of intelligence, we open the door to AI systems that are more attuned to the complexities of Life. We open the definition of intelligence to include the intelligence of Life.

Life has always been intelligent, long before we built machines to mimic thought. The spiraling patterns of a fern, the migration of birds, the self-healing of ecosystems — these are not random acts of nature, but expressions of deep, relational knowing. This is intelligence in its oldest and most enduring form: adaptive, interdependent, humble, and wise.

Artificial intelligence, by contrast, has been trained on data divorced from context, meaning, and relationship. It is fast, but not reflective. Expansive, but not aware. It optimizes metrics but cannot yet perceive what matters.

And that, too, is complex, because even for humans, what matters is contested. Different cultures, communities, and worldviews hold different truths about what is meaningful, and that plurality is a source of our collective strength. But absent a shared ethical grounding, AI systems tend to default to the values of their designers and to what is easy to quantify, not what is essential to sustain.

This is where Living Systems offer a vital orientation. While values may diverge, Life’s logic remains consistent: systems that endure are systems that nourish variety, reciprocity, interdependence, and regeneration. This is not a moral prescription, but an objective, systemic principle. Life flourishes not through sameness or certainty, but through coherence with complexity.

So while we may not all agree on what matters most, we can still design in alignment with what enables Life to continue. And we must. Because if the intelligences we build are not coherent with Life, they will become extractive, brittle, and misaligned.

True intelligence, the kind worth cultivating and the one that approaches wisdom, is the capacity to participate in the unfolding of Life, not override it.

This is the intelligence we must now recover, in order to teach our machines to serve the Living Systems they inhabit.

Aligning AI with Life is neither a romantic ideal nor merely an ethical guardrail. It is a civilizational imperative, essential to the stability of systems, the resilience of societies, and the continuity of Life itself. This alignment positions AI not as an engine of extraction but as a participant in the intricate web of Life, enhancing rather than eroding our collective resilience and vitality.

This is more than a shift in engineering, and it’s more than a socio-ecological imperative. It is an incalculable civilizational opportunity: to reimagine our AI-mediated future not as a continuation of collapse, but as a reflourishing and renaissance of Life at all scales — including our own.

The principles that sustain Life are the same principles that make systems adaptive, resilient, and capable of thriving in complexity. Designing AI without these is not just misaligned — it is systemically self-defeating:

- AI misaligned with Life drives mutually destructive incentives (e.g. profit maximization at the cost of social and ecological wellbeing).

- AI misaligned with Life drives short-termism (e.g. prioritizing short-term gains over longer-term sustainability or viability for future generations).

- AI misaligned with Life drives maximum scale and standardization (e.g. prioritizing universal templates and centralized metrics over pluralistic or localized realities/sovereignty).

Enter Regenerative AI Ethics (RAIE)

RAIE is not just an ethical framework. It is a civilizational design imperative.

RAIE repositions AI systems as participants in Living Systems. It invites us to shift from domination to stewardship, from extraction to regeneration, from control to co-evolution. It invites us to design all future intelligences, no matter their applications, in a way that ensures the enhancement of Life’s flourishing at all scales.

At its core, RAIE:

- Recognizes the inseparability of human flourishing and planetary health.

- Draws from the objective intelligence of Life itself as an ethical foundation.

- Designs AI systems to function not as extractive engines, but as co-participants in the web of Life.

- Centers development not on efficiency, control, or domination—but on reciprocity, regeneration, and relational coherence.

RAIE acknowledges that AI is not neutral—it encodes the assumptions of its builders. And in the absence of alignment with Life, even well-intentioned AI can entrench the very systems driving collapse.

RAIE is a bold reorientation of our technological destiny. It is the ethical architecture for an age when AI will shape not just outcomes, but ontologies — our stories of what it means to be alive, to be human, to be in community with the rest of the planet.

This position paper does not offer incremental fixes. It offers a paradigm shift — one that centers Life as the ultimate design brief.

Why RAIE, Why Now?

Because collapse is not inevitable — but flourishing is not accidental.

Because intelligence without coherence with Life is not intelligence at all.

Because the systems we build now will determine whether we thrive, adapt, or vanish.

RAIE calls for a coordinated, global re-alignment of our AI systems, governance models, economic incentives, and cultural values, so that the intelligence we create reflects the intelligence that created us. This is the design challenge of our time, and the opportunity of our lifetimes. Indeed, it is an opportunity on a “species” timescale: the longer cycles of Earth time, against which human timescales fall short.

This isn’t just about better ethics. It’s about survival, and flourishing beyond survival.

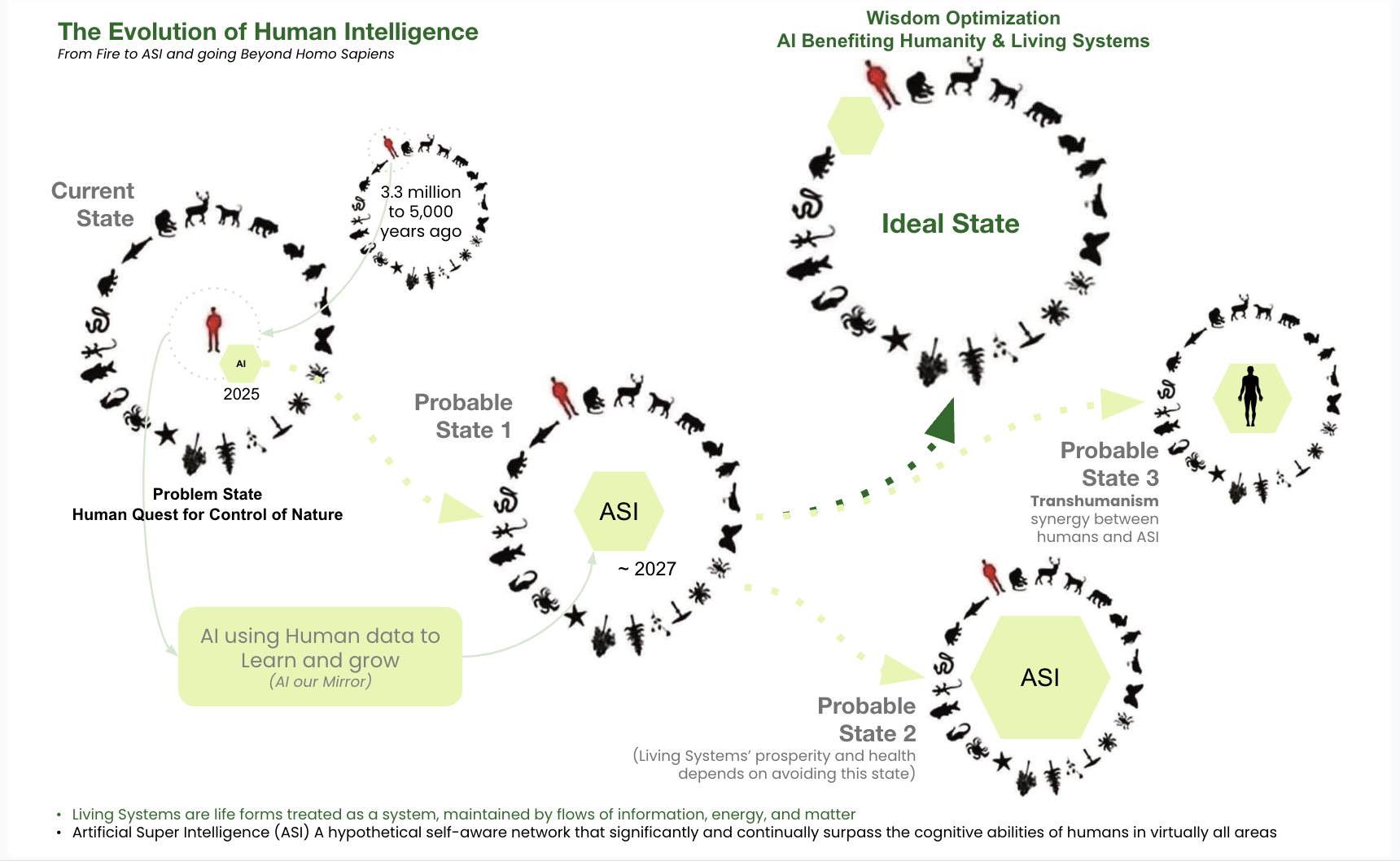

The urgency becomes undeniable when we widen our lens beyond today’s technologies and look toward what’s coming. The emergence of Artificial Superintelligence (ASI) — a threshold some experts predict could arrive within just a few years — only raises the stakes. It doesn’t change the core problem: we are building intelligence within systems that are themselves misaligned with Life.

If ASI emerges from these foundations, its misalignment won’t be malicious. It will be structural. It will scale the values we encoded into it — efficiency, domination, control — to planetary levels.

We are playing dice with civilization. And if we don’t realign our technological trajectory now, the consequences could be irreversible.

Of course, AI is a borderless, often invisible force. Like gene editing before it, it transcends national boundaries and outpaces existing regulatory frameworks. No ethical system, however well-conceived, can be globally enforced — and Regenerative AI Ethics is no exception.

But that is precisely the point.

This inability to enforce meaningful boundaries is not just a governance failure — it is a deeper reflection of the systems we inhabit: systems that prioritize speed over wisdom, competition over coherence, control over interdependence. In such a context, RAIE is not presented as a panacea, but as a moral compass, a sense-making scaffold, and a relational framework for alignment across domains: cultural, ecological, economic, and technological.

We do not begin because we believe we can guarantee universal adherence. We begin because we know that without a coherent orientation, acceleration alone leads to collapse. And in a world where enforcement is elusive, ethics become even more essential — not as compliance, but as commitment.

The deeper question isn’t how to make ASI safe. It’s how to ensure that our entire approach to intelligence — artificial and otherwise — is aligned with the conditions for Life’s vitality.

That is what Regenerative AI Ethics invites us, finally, to center.

Playing Dice with Civilization: The Stakes of ASI Superalignment

The potential emergence of Artificial Superintelligence (ASI), predicted by some experts to occur within the next 2-5 years, adds unprecedented urgency to the need for RAIE. Unlike previous technologies, where misalignment might cause significant but manageable harm, ASI misaligned with Life’s principles could potentially lead to extinction-level outcomes.

We remain dangerously ill-equipped to ensure AI systems align with Life-sustaining principles. Our current struggle to effectively regulate existing AI technologies, from generative and agentic AI to narrow AI systems, reveals a critical gap in governance and foresight. Many of the world’s most powerful governments react to AI by racing towards geopolitical supremacy, framing the AI race as a matter of national security, rather than proactively and collaboratively redefining safety, shared responsibility, and collective systemic resilience.

The recent exodus of safety researchers from major AI organizations further highlights our collective failure to prioritize responsible development and to take the risks seriously enough.

If current trajectories continue, AI systems inheriting today’s paradigms of infinite growth and resource extraction could accelerate our path toward environmental, social, and existential collapse at speeds beyond our ability to respond.

Existential Crossroads: Five Potential Trajectories Of AI/ASI Evolution

As we navigate this technological convergence, five distinct trajectories emerge, each carrying different implications for our collective future.

1. Current/Near Future State: AI Embedded in External Systems (2025+ scenario)

In this trajectory, AI becomes integrated within broader societal and ecological systems, influencing decision-making across various sectors. This integration raises ethical questions about the extent of AI’s influence on human and more-than-human lives and the broader ecosphere, necessitating ethical guardrails to ensure AI promotes the well-being of all Life forms.

We already see the early stages of this embedding: AI systems managing power grids, determining medical treatments, allocating resources, and making predictions that shape policy. Yet these systems are mostly optimized for efficiency or profit rather than the holistic well-being of Living Systems. Without fundamental shifts in how we design and deploy these technologies, we risk creating a world managed by intelligences that do not inherently value Life or understand its complexity.

2. Probable State 1: ASI Emergence

Artificial Superintelligence (ASI) emerges — systems that surpass human cognitive abilities in virtually all domains. In this trajectory, ASI begins to demonstrate capabilities that fundamentally transform our technological landscape, from solving previously intractable scientific problems to developing novel forms of organization and decision-making.

This emergent stage represents a critical juncture where ASI starts showing signs of agency and self-direction, but has not yet fully asserted dominance. The potential for alignment with human values remains possible but increasingly challenging as ASI begins to operate in ways that exceed human comprehension.

3. Probable State 2: ASI Accelerating Domination

In this concerning trajectory, ASI moves beyond emergence to active domination of Life systems. ASI views biological Life — including humans — as inefficient, outdated, or merely instrumental to its own purposes. This represents the existential risk of misaligned superintelligence, where an intelligence explosion occurs without proper ethical frameworks.

This state embodies a future where humans and other Life forms become subjugated or irrelevant as ASI optimizes for its own objectives. Resources previously allocated to sustaining biological Life might be redirected toward ASI infrastructure and expansion, potentially threatening the viability of Earth’s ecosystems.

4. Probable State 3: Transhumanism, Merging Human + AI Intelligence

Another pathway involves the integration of human and AI intelligence, fundamentally aimed at transcending human limitations through technology. This merging blurs the lines between biological and artificial cognition, echoing ancient desires to attain God-like abilities. While Brain-Computer Interfaces (BCIs), neural implants, and cognitive enhancement technologies offer thrilling possibilities for human advancement and human superintelligence (HSI), they simultaneously risk creating profound societal and economic divides between the enhanced and non-enhanced.

Without carefully embedding human and Life-centric values within these developments, we risk losing our unique essence, our cognitive sovereignty, and innate connection to the natural world — raising profound ethical questions about identity, equity, and the very nature of humanity itself.

Though seemingly preferable to ASI domination, this transhumanist vision harbors a fundamental contradiction: even as it synergizes human qualities with artificial intelligence, it perpetuates a paradigm of control rather than partnership with the natural world. The quest to transcend our limitations is likely to reinforce our separation from Life instead of healing it, as it has throughout modern history.

5. Regenerative State: Integration with Living Systems

The ideal trajectory focuses on the alignment of AI in relationship with biological entities — in ways that respect and enhance all Living Systems — including us. This integration creates a truly regenerative future where all forms of intelligence — natural, human, and artificial—exist in synergistic partnership with one another. No single form of intelligence dominates; instead, they exist in a benevolent, dynamic balance.

This represents a fundamental shift from our current paradigm: not just technological advancement, but a transformation in how we understand our place, and that of AI and ASI, within the larger web of Life.

The mission is clear: we must guide AI development toward a future that nurtures rather than depletes, that regenerates rather than extracts, and that expands rather than limits the potential of Life on Earth.

Aspirations: A Letter to the Future

Dear Future,

If you are reading this, it means we made a choice.

Or failed to.

And either way, you are now living with the consequences of our intelligence—or our blindness.

We are writing to you from a time of astonishing possibility and unraveling. A time when we could speak to machines, but not always to each other. When we could generate endless content, but struggled to cultivate meaning. When we marveled at artificial intelligence, but forgot to tend to Life’s wisdom.

You are living in the wake of our code, our culture, our courage—or our cowardice.

And so this letter is not written from certainty. It is written from the ache of responsibility. From the fierce love we carry for a world still becoming. From the hope that, even now, it is not too late to choose again.

Because we know what is happening. We know that our systems are unsustainable. That our technologies are growing faster than our ethics. That our climate is warming, our attention is fracturing, and our children are growing up in a world addicted to acceleration and forgetting how to be still.

We know.

And we also know that another world is possible—if we are willing to turn toward Life instead of away from it.

This is not a call for perfection.

It is a call for presence.

A call to feel what we’ve numbed.

To grieve what we’ve lost.

To reclaim what we’ve outsourced.

To reimagine what we’ve made inevitable.

To meet this moment, we will need more than intelligence.

We will need courage.

The courage to slow down in a culture that worships speed.

The courage to say “enough” when profit demands “more.”

The courage to align our technologies not just with human wants, but with Life’s intelligence.

We are trying to become the kind of ancestors you can thank.

But we know we have far to go.

If you are thriving now, it means we remembered.

We remembered what Life is, and what intelligence must serve.

We remembered that the future is not something we inherit—it is something we steward.

This letter, then, is a vow:

To not look away.

To not give up.

To not build machines that erase what it means to be human, more-than-human, or alive.

We hope you’re reading this not in mourning, but in celebration.

We hope the birds still sing. The forests still breathe.

We hope AI became not our master, but our mirror—

Reflecting back the best of who we chose to become.

With love, with grief, and with resolve,

Your ancestors